For those who have followed my in the last few years know that I am a big fan of Layer 3 routing, BGP, VXLAN and EVPN. In networks I design I try to eliminate the use of Layer 2 as much as possible.

Although I think VXLAN is great, it still creates a virtual Layer 2 domain where hosts exist. Multicast and broadcast traffic are still required for Neighbor Discovery in IPv6 and ARP in IPv4. This is not always ideal. EVPN is also not simple, as it can be complex to set up and maintain. Even so, I would choose EVPN with VXLAN over any Layer 2 network any day.

Layer 3 routing

My goal was to see if I could remove Layer 2 entirely and use pure Layer 3 routing for my virtual machines. This requires routing single host IPv4 and IPv6 addresses directly to the virtual machines, without any shared Layer 2 domain.

I came across Redistribute Neighbor in Cumulus Linux, which uses a Python daemon called rdnbrd. This daemon intercepts IPv4 ARP packets from hosts and injects them as single host IPv4 routes into the BGP routing table.

Could this also work for virtual machines and with IPv6? Yes!

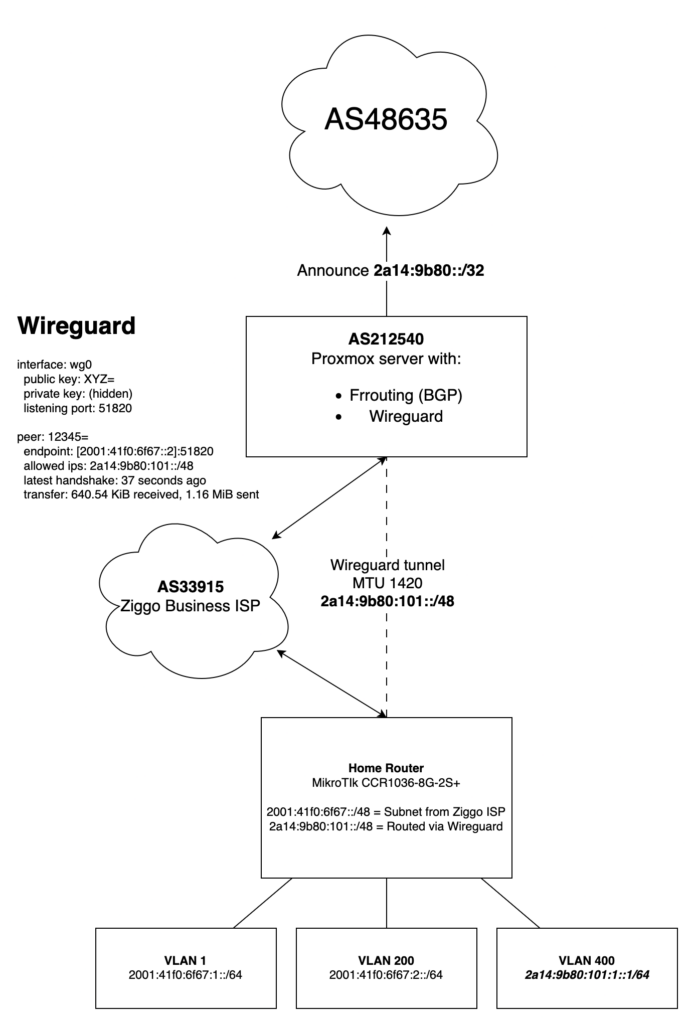

Over several months I spoke with various people at conferences, read a number of online articles and used these pieces of information to build a working prototype on my Proxmox server, which runs BGP.

/32 and /128 towards a VM

In the end it wasn’t that difficult. I started with creating a Linux bridge on my Proxmox node where I would configure two addresses, 169.254.0.1/32 for IPv4 and fe80::1/64 for IPv6. This is how it looks like in the /etc/network/interfaces file.

auto vmbr1

iface vmbr1 inet static

address 169.254.0.1/32

address fe80::1/64

bridge-ports none

bridge-stp off

bridge-fd 0The webserver running this WordPress blog was reconfigured and attached to this bridge. Inside the Virtual Machine there is Ubuntu Linux with netplan and this is what I ended up configuring in /etc/netplan/network.yaml

network:

ethernets:

ens18:

accept-ra: no

nameservers:

addresses:

- 2620:fe::fe

- 2620:fe::9

addresses:

- 2.57.57.30/32

- 2001:678:3a4:100::80/128

routes:

- to: default

via: fe80::1

- to: default

via: 169.254.0.1

on-link: true

version: 2Here you can see that I configured two addresses (2.57.57.30/32 and 2001:678:3a4:100::80/128) and manually configured the IPv4 and IPv6 gateways.

root@web01:~# fping 169.254.0.1

169.254.0.1 is alive

root@web01:~# fping6 fe80::1%ens18

fe80::1%ens18 is alive

root@web01:~#The VM can reach both the gateways, great! You can also see that these are set as the default gateway and the addresses have been configured on the interface ens18.

root@web01:~# ip addr show dev ens18 scope global

2: ens18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:02:45:76:d2:35 brd ff:ff:ff:ff:ff:ff

altname enp0s18

inet 2.57.57.30/32 scope global ens18

valid_lft forever preferred_lft forever

inet6 2001:678:3a4:100::80/128 scope global

valid_lft forever preferred_lft forever

root@web01:~# root@web01:~# ip -6 route show

::1 dev lo proto kernel metric 256 pref medium

2001:678:3a4:100::80 dev ens18 proto kernel metric 256 pref medium

fe80::/64 dev ens18 proto kernel metric 256 pref medium

default via fe80::1 dev ens18 proto static metric 1024 pref medium

root@web01:~# ip -4 route show

default via 169.254.0.1 dev ens18 proto static onlink

root@web01:~# Routing on the Proxmox node

On the Proxmox node I now needed to add these routes and the Neighbors into the ARP (IPv4) and NDP (IPv6) tables based on the MAC address, this resulted in these commands to be executed:

ip -6 route add 2001:678:3a4:100::80/128 dev vmbr1

ip -6 neigh add 2001:678:3a4:100::80 lladdr 52:02:45:76:d2:35 dev vmbr1 nud permanent

ip -4 route add 2.57.57.30/32 dev vmbr1

ip -4 neigh add 2.57.57.30 lladdr 52:02:45:76:d2:35 dev vmbr1 nud permanentIt required manual execution of these commands, but for a production environment you would need to have some form of automation who does this for you.

On my Proxmox node there is the FRRouting BGP daemon running which now picks up these routes and advertises them to the upstream router:

hv-138-a12-26# sh bgp neighbors 2001:678:3a4:1::50 advertised-routes 2001:678:3a4:100::80/128

BGP table version is 25, local router ID is 2.57.57.4, vrf id 0

Default local pref 100, local AS 212540

BGP routing table entry for 2001:678:3a4:100::80/128, version 22

Paths: (1 available, best #1, table default)

Advertised to non peer-group peers:

2001:678:3a4:1::50

Local

:: from :: (2.57.57.4)

Origin incomplete, metric 1024, weight 32768, valid, sourced, best (First path received)

Last update: Fri Nov 28 22:52:35 2025

Total number of prefixes 1

hv-138-a12-26# sh ip bgp neighbors 2001:678:3a4:1::50 advertised-routes 2.57.57.30/32

BGP table version is 11, local router ID is 2.57.57.4, vrf id 0

Default local pref 100, local AS 212540

BGP routing table entry for 2.57.57.30/32, version 9

Paths: (1 available, best #1, table default)

Advertised to non peer-group peers:

2001:678:3a4:1::50

Local

0.0.0.0 from 0.0.0.0 (2.57.57.4)

Origin incomplete, metric 0, weight 32768, valid, sourced, best (First path received)

Last update: Fri Nov 28 22:52:47 2025

Total number of prefixes 1

hv-138-a12-26#This makes the upstream aware of these routes and establishes connectivity.

VM mobility

This example is just a single Proxmox node, but this could easily work in a clustered environment. Using automation you would need to make sure the routes and ARP/NDP entries ‘follow’ the VM as it migrates to a different host.

This could be achieved using Hookscripts in Proxmox for example, but this is something I haven’t researched.

This blogpost is primarily to show that this is technically possible and it’s up to you on how to implement this into your environment should you want to do so.