When it comes to storage under Linux, you have a lot of great options for local storage. But what if you have so much data that local storage is not really an option? And what if multiple servers need to access that data? You’ll probably turn to NFS, or to iSCSI with a clustered filesystem like GFS or OCFS2.

When using NFS or iSCSI it will come down to one, two or maybe three servers storing your data, where one will have the primary role 99.99% of the time. That is still a Single Point-of-Failure (SPoF).

Although this has worked fine (and still does), we are running into limitations. We want to store more and more data, we want to expand without downtime and we want expansion to go smoothly. Doing all that under Linux right now is... let’s say: a challenge.

Energy costs are also rising and, whether you like it or not, that influences the work of a system administrator. We were used to having an Active/Passive setup, but that doubles your energy consumption! In large environments that could mean a lot of money. Do we still want that? I don’t think so.

Distributed storage is what we need: no central brain and no passive nodes, but a fully distributed and fault-tolerant filesystem where every node is active and which scales easily without any disruption in service.

I think it’s nearly there and they call it Ceph!

Ceph is a distributed file system built on top of RADOS, a scalable and distributed object store. This object store simply stores objects in pools (which some people might refer to as “buckets”). It’s this distributed object store which is the basis of the Ceph filesystem.

RADOS works with Object Store Daemons (OSDs). Each OSD is a daemon with a data directory (on btrfs) where it stores its objects and some basic information about the cluster. Typically the data directory of an OSD is a single hard disk formatted with btrfs.

Every pool has a replication size property, which tells RADOS how many copies of an object you want to store. If you choose 3, every object you store in that pool will be stored on three different OSDs. This provides data safety and availability: losing one (or more) OSDs will lead to neither data loss nor unavailability.
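To make the trade-off behind the replication size concrete, here is a minimal Python sketch of the arithmetic. The function names and the example figures are my own illustration and not part of Ceph, and the sketch ignores filesystem and journal overhead.

```python
def usable_capacity_tb(raw_tb: float, replication_size: int) -> float:
    """Rough usable capacity when every object is stored `replication_size` times."""
    if replication_size < 1:
        raise ValueError("replication size must be at least 1")
    return raw_tb / replication_size


def tolerated_osd_losses(replication_size: int) -> int:
    """How many of the OSDs holding one object may fail while one copy survives."""
    return replication_size - 1


if __name__ == "__main__":
    raw = 12 * 2.0  # hypothetical example: 12 disks of 2TB each
    for size in (1, 2, 3):
        print(size, usable_capacity_tb(raw, size), tolerated_osd_losses(size))
```

A higher replication size buys more safety, but you pay for it in raw disk space.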

Data placement in RADOS is done by CRUSH. With CRUSH you can strategically place your objects (and their replicas) in different rooms, racks, rows and servers. One might want to place the second replica on a separate power feed than the primary replica.
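The idea of spreading replicas over failure domains can be illustrated with a toy Python sketch. To be clear: this is not the CRUSH algorithm. It is a simple hash-based stand-in (rendezvous hashing), and the cluster map, rack names and function names are all hypothetical. It only shows the two properties that matter here: placement is computed from the object name rather than looked up in a central table, and no two replicas end up in the same rack.

```python
import hashlib

# Hypothetical cluster map: rack name -> OSD ids in that rack.
CLUSTER = {
    "rack-a": [0, 1, 2, 3],
    "rack-b": [4, 5, 6, 7],
    "rack-c": [8, 9, 10, 11],
}


def _score(object_name: str, item: str) -> int:
    """Deterministic pseudo-random score for an (object, item) pair."""
    digest = hashlib.sha256(f"{object_name}/{item}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, replicas: int, cluster=CLUSTER) -> list:
    """Pick `replicas` (rack, osd) pairs, each in a different rack."""
    if replicas > len(cluster):
        raise ValueError("not enough racks for one replica per rack")
    # Rank the racks by score, highest first, and keep the top `replicas`.
    racks = sorted(cluster, key=lambda r: _score(object_name, r), reverse=True)
    chosen = []
    for rack in racks[:replicas]:
        # Within each chosen rack, pick the OSD with the highest score.
        osd = max(cluster[rack], key=lambda o: _score(object_name, f"osd.{o}"))
        chosen.append((rack, osd))
    return chosen


if __name__ == "__main__":
    print(place("myobject", 3))
```

Because the result depends only on the object name and the cluster map, every client that knows the map computes the same placement on its own.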

A small RADOS cluster could look like this:

This is a small RADOS cluster: three machines with 4 disks each and one OSD per disk. The monitor is there to inform the clients about the cluster state. Although this setup has one monitor, monitors can be made redundant by simply adding more (an odd number is preferable).
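Why an odd number of monitors? A short Python sketch of the arithmetic, assuming the monitors need a strict majority to agree on the cluster state (the function names are mine, for illustration only):

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of `monitors`."""
    if monitors < 1:
        raise ValueError("need at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Monitors that may fail while a majority is still reachable."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for n in range(1, 6):
        print(n, quorum_size(n), tolerated_failures(n))
```

Under that assumption, going from three to four monitors does not let you lose any more of them, so the even-numbered monitor adds hardware without adding fault tolerance.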

With this post I don’t want to tell you everything about RADOS and its internal workings; all of that information is available on the Ceph website.

What I do want to tell you is what my experiences with Ceph are at this point and where it’s heading.

I started testing Ceph about 1.5 years ago. I stumbled on it when reading the changelog of 2.6.34, the first kernel to include the Ceph kernel client.

I’m always on a quest to find a better solution for our storage. Right now we are using Linux boxes with NFS, but that is really starting to hurt in many ways.

How far did Ceph get in the past 18 months? Far! I started testing when version 0.18 had just come out; right now we are at 0.31!

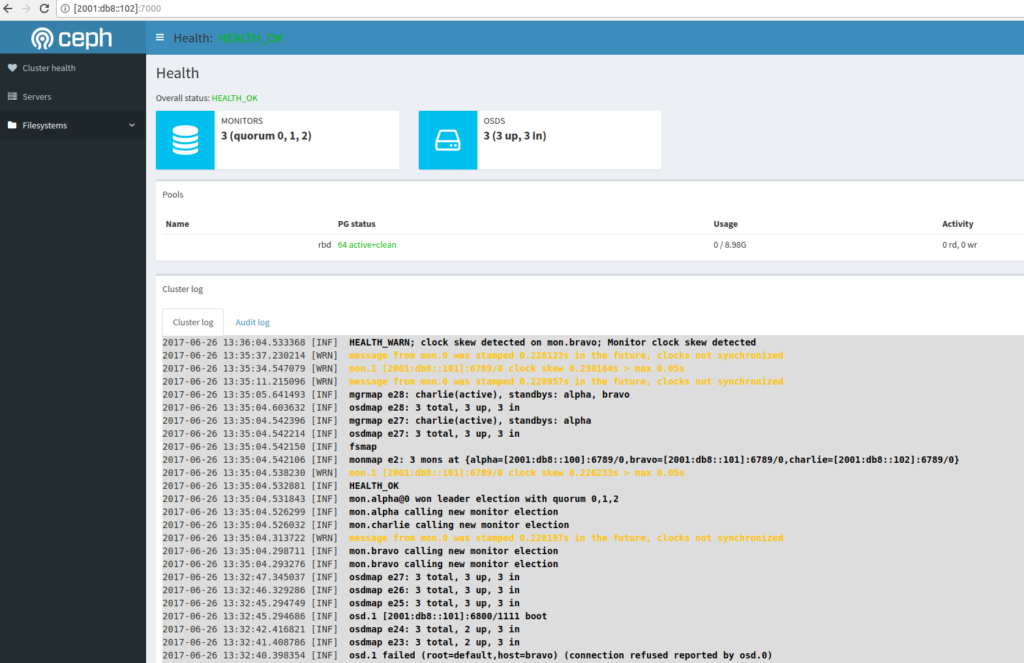

I started by testing the various components of Ceph on a small number of virtual machines, but currently I have two clusters running. The first is a “semi-production” cluster where I’m running various virtual machines with RBD and Qemu-KVM. The second is a 74TB cluster of 10 machines, each with four 2TB disks.

Filesystem Size Used Avail Use% Mounted on

[2a00:f10:113:1:230:48ff:fed3:b086]:/ 74T 13T 61T 17% /mnt/ceph

As you can see, I’m running my cluster over IPv6. Ceph does not support dual-stack, so you will have to choose between IPv4 and IPv6. I prefer the latter.

But you are probably wondering how stable or production-ready it is. That question is hard to answer. My small cluster, where I run the KVM virtual machines (through Qemu-KVM with RBD), has only 6 OSDs and a capacity of 600GB. It has been running for about 4 months now without any issues, but I have to be honest: I didn’t stress it either. I didn’t kill any machines, nor did any hardware fail. It should be able to handle those crashes, but I haven’t put that to the test.

The story is different with my big cluster. In total it’s 15 machines: 10 machines hosting a total of 40 OSDs, while the rest are monitors, metadata servers and clients. It started running about 3 months ago and since then I’ve seen numerous crashes. I also chose to use WD Green 2TB disks in my cluster, which was not the best decision. Right now I have a 12% failure rate on these disks. While the failure of those disks is not a good thing, it is a good test for Ceph!

Some disk failures caused serious problems, with the cluster starting to bounce around and never recovering. But about 2 days ago I noticed two other disks failing, and the cluster fully recovered while an rsync was writing data to it. So, it seems to be improving!

During my further testing I have stumbled upon a lot of things. My cluster is built with Atom CPUs, but those seem to be a bit underpowered for the work. Recovery is heavy for OSDs, so whenever something goes wrong in the cluster I see the CPUs spiking towards 100%. This is something that is being addressed.

Data is placed in Placement Groups (PGs). The more data or OSDs you add to the cluster, the more PGs you’ll get. The more PGs you have, the more memory your OSDs start to consume. My OSDs have 4GB (an Atom limitation) each. Recovery is not only CPU-hungry, it will also eat your memory. Although the use of tcmalloc reduced the memory usage, OSDs sometimes still use a lot of memory.

To come to some sort of conclusion: are we there yet? Short answer: No. Long answer: No again, but we will get there. Although Ceph still has a long way to go, it’s on the right path. I think that Ceph will become the distributed storage solution under Linux, but it will take some time. Patience is the key here!

The last thing I want to address is the fact that testing is needed! Bugs don’t reveal themselves; you have to hunt them down. If you have spare hardware and time, do test and report!