Over the past few years I’ve said goodbye to hardware RAID controllers and relied mainly on software solutions like mdadm, LVM, Ceph and ZFS(-on-Linux) to keep data safe.

At PCextreme we use hypervisors with local NVMe storage running in Linux’s mdadm software RAID-10. This works well, but I wasn’t satisfied with the performance for a few reasons:

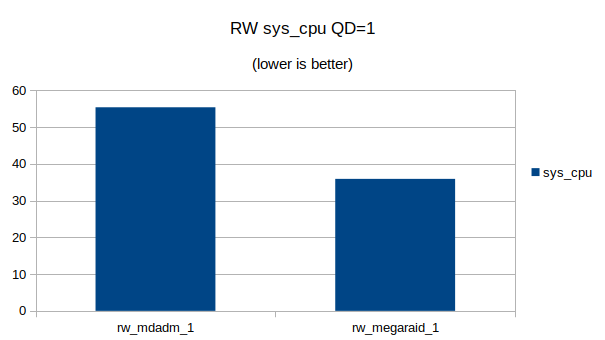

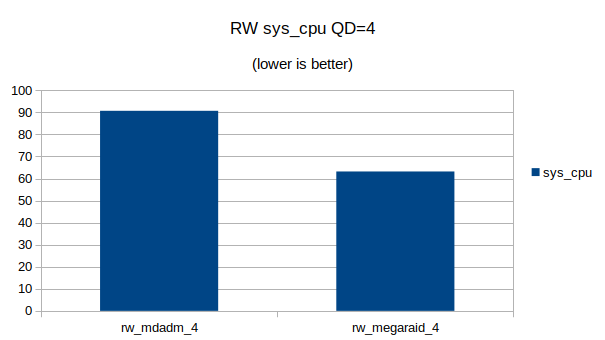

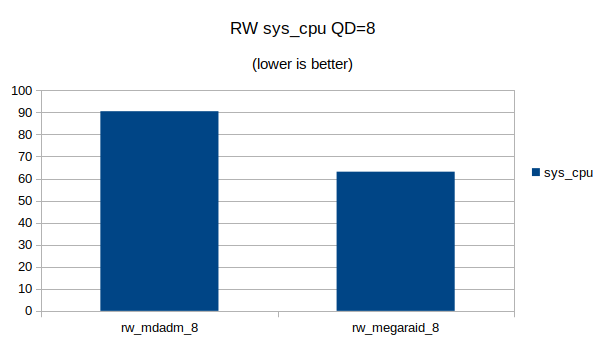

- It is expensive on the CPUs (Dual AMD Epyc 48-core)

- It’s not super fast

We mainly use Samsung PM983 (1.92TB) devices, and I started looking for a hardware solution that could offload the RAID computation to a dedicated SoC so it wouldn’t eat up our CPU cycles.

After searching I found the Broadcom SAS3916 chip which is on the MegaRAID 9516-16i controller from Broadcom. This chipset supports NVMe devices in various RAID modes.

I wanted to benchmark Linux’s software RAID against the Broadcom controller to see if it would be faster and save us the expensive CPU cycles.

With mdadm we also looked into RAID-5/6 to get more usable space. However, we found that it eats up so many CPU cycles that it wasn’t feasible to use in production for our purposes.
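To make the "more usable space" trade-off concrete, here is a minimal sketch of the capacity arithmetic. It assumes identical drives, RAID-10 with two copies of every block, and ignores metadata overhead; the function name `usable_capacity_tb` and the 8-drive example are only for illustration and do not describe our production layout.

```python
def usable_capacity_tb(level, drives, size_tb):
    """Approximate usable capacity (TB) for an array of identical drives."""
    if level == "raid10":
        # Two copies of every block: half of the raw capacity is usable.
        if drives < 2:
            raise ValueError("RAID-10 needs at least 2 drives")
        return drives * size_tb / 2
    if level == "raid5":
        # One drive's worth of capacity goes to parity.
        if drives < 3:
            raise ValueError("RAID-5 needs at least 3 drives")
        return (drives - 1) * size_tb
    if level == "raid6":
        # Two drives' worth of capacity go to parity.
        if drives < 4:
            raise ValueError("RAID-6 needs at least 4 drives")
        return (drives - 2) * size_tb
    raise ValueError("unsupported RAID level: " + level)


# Compare the levels for a 4-drive and a (hypothetical) 8-drive array.
for drives in (4, 8):
    for level in ("raid10", "raid5", "raid6"):
        capacity = usable_capacity_tb(level, drives, 1.92)
        print(f"{drives} drives, {level}: {capacity:.2f} TB")
```

With only four drives RAID-6 yields the same usable capacity as RAID-10, so the space gain of RAID-6 only shows up with larger arrays, while RAID-5 gains one drive's worth of space right away.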

Benchmarking setup

- Ubuntu Linux 18.04 with kernel 5.3

- SuperMicro 1114S-WN10RT

- AMD Epyc 7302P 16-core CPU

- 128GB Memory

- 4x Samsung PM983 1.92TB

- Broadcom MegaRAID 9516-16i

Benchmarking is done using fio, and the main things we are looking at are:

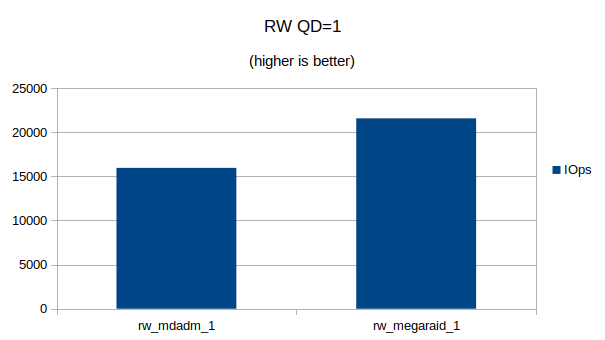

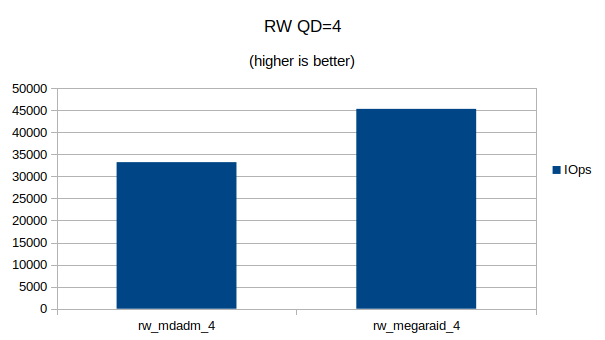

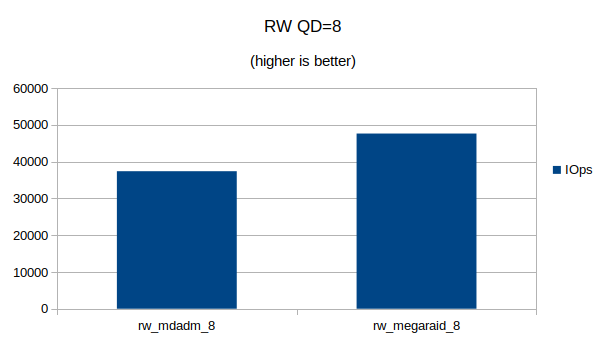

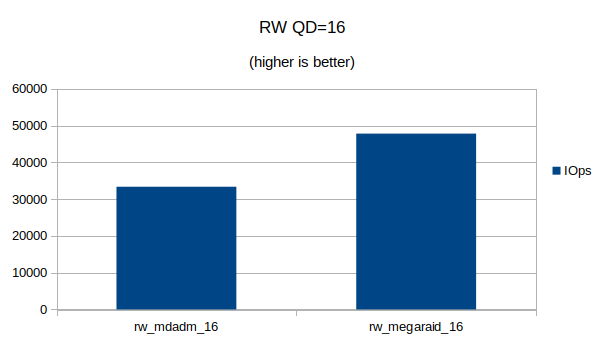

- 4k random write performance at queue depths (QD) of 1, 4, 8 and 16

- CPU utilization during benchmarks
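One way to capture system-wide CPU utilization during a benchmark run is to sample `/proc/stat` before and after. The sketch below is only an assumption about how this could be measured, not necessarily the method used for the numbers in this post; the helper names are made up for illustration.

```python
import time


def parse_cpu_line(stat_text):
    """Return (busy, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            # Fields: user nice system idle iowait irq softirq steal
            fields = [int(v) for v in line.split()[1:9]]
            idle = fields[3] + fields[4]  # idle + iowait count as not busy
            total = sum(fields)
            return total - idle, total
    raise ValueError("no aggregate cpu line found")


def utilization_pct(before, after):
    """CPU utilization in percent between two /proc/stat snapshots."""
    busy0, total0 = parse_cpu_line(before)
    busy1, total1 = parse_cpu_line(after)
    if total1 == total0:
        return 0.0
    return 100.0 * (busy1 - busy0) / (total1 - total0)


def sample(interval_s=1.0):
    """Measure utilization of all CPUs over interval_s seconds (Linux only)."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval_s)
    with open("/proc/stat") as f:
        after = f.read()
    return utilization_pct(before, after)
```

Calling `sample(5.0)` while fio is running returns the average utilization across all cores over five seconds. Measuring system-wide matters here because software RAID work is done by the kernel and is not attributed to the fio process itself.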

MegaRAID configuration

The RAID-10 array was created using storcli:

storcli64 /c0 add vd type=raid10 drives=252:4,6,8,10 pdperarray=2

mdadm setup

RAID-10 was set up using this command:

mdadm --create --level=10 --raid-devices=4 /dev/md0 /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1

fio

The fio jobs we ran to benchmark:

[global]
ioengine=libaio
direct=1
invalidate=1
bs=4k
runtime=300
filename=/var/lib/libvirt/images/fio
size=64g
rw=randwrite
numjobs=1

[rw_mdadm_1]
iodepth=1

[rw_mdadm_4]
iodepth=4

[rw_mdadm_8]
iodepth=8

[rw_mdadm_16]
iodepth=16

[rw_mdadm_32]
iodepth=32
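Note that fio starts all sections of a job file at the same time unless `stonewall` is set or a single section is selected with `--section`. A minimal sketch of running each queue depth on its own and saving JSON output per run could look like the following; the job file name `raid.fio` and the output file naming are assumptions for illustration only.

```python
import subprocess

# Section names as they appear in the job file above.
SECTIONS = ["rw_mdadm_1", "rw_mdadm_4", "rw_mdadm_8", "rw_mdadm_16", "rw_mdadm_32"]


def fio_command(job_file, section):
    """Build the fio command line that runs one section and writes JSON output."""
    return [
        "fio",
        f"--section={section}",       # only run this section of the job file
        "--output-format=json",
        f"--output={section}.json",   # hypothetical naming: one file per section
        job_file,
    ]


def run_all(job_file, sections=SECTIONS):
    """Run the sections one after another so they do not compete for I/O."""
    for section in sections:
        subprocess.run(fio_command(job_file, section), check=True)
```

Calling `run_all("raid.fio")` then produces one JSON file per queue depth, which keeps the results for each depth cleanly separated.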

Results

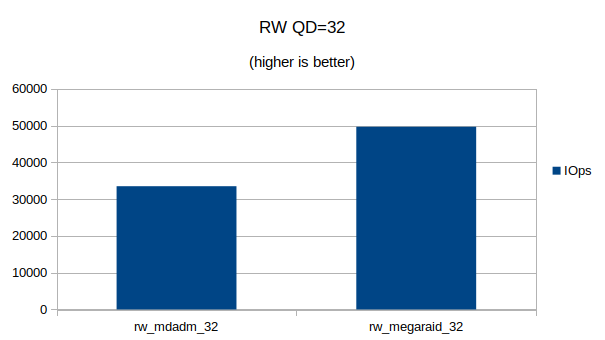

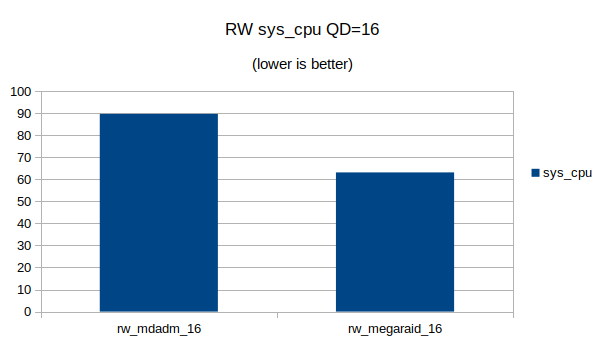

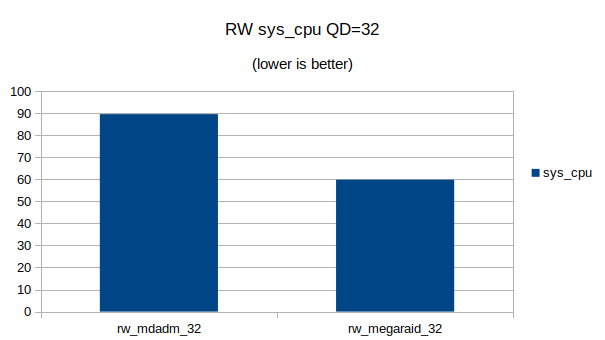

Short answer? The MegaRAID controller is much faster in RAID-10 mode and also saves up to 30% of CPU cycles on the Linux machine.

You can also download the JSON output from fio here:

In addition, you can download the OpenOffice spreadsheet I used to process the data and generate the graphs.
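If you would rather process the fio JSON output with a script than a spreadsheet, a minimal sketch could look like this. It assumes the JSON layout of fio 3.x (a top-level `jobs` list with `jobname`, `write.iops`, `write.lat_ns.mean`, `usr_cpu` and `sys_cpu`); the function name `summarize` is made up for illustration.

```python
import json


def summarize(fio_json_text):
    """Extract write IOPS, mean latency and fio's own CPU usage per job."""
    data = json.loads(fio_json_text)
    rows = []
    for job in data["jobs"]:
        write = job["write"]
        rows.append({
            "job": job["jobname"],
            "iops": write["iops"],
            # lat_ns is reported in nanoseconds; convert to microseconds.
            "mean_lat_us": write.get("lat_ns", {}).get("mean", 0.0) / 1000.0,
            # CPU time of the fio process only, in percent.
            "fio_cpu_pct": job.get("usr_cpu", 0.0) + job.get("sys_cpu", 0.0),
        })
    return rows
```

Keep in mind that `usr_cpu` and `sys_cpu` only cover the fio process. CPU time spent in kernel RAID threads is not included, so system-wide CPU utilization has to be measured separately.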

Graphs

Some graphs with the IOPS and CPU utilization results.