At PCextreme we have a 700TB Ceph cluster that backs our public cloud, Aurora Compute, which runs Apache CloudStack.

Ceph health

One of the things we monitor on the Ceph cluster is its health. This can be OK, WARN or ERR. Obviously you always want to see OK, but things do go wrong. Disks fail, machines die, kernel panics happen.

I thought it would be cool to buy a real, used traffic light and install it at the office. OK would be green, WARN would be orange/amber and ERR would be red.

Second-hand traffic light

Some searching on the internet brought me to trafficlightshop.com. They sell used (Dutch) traffic lights. I bought a Vialis 2230 (the largest one in the picture below).

For EUR 75 I got my hands on an original traffic light!

Controlling the lights

When I got the traffic light it was already equipped with LED lights which work on 230V. A 30cm cable (cut off) was sticking out with 4 wires in it:

- Blue: neutral

- Green: phase (live) for green

- Yellow: phase (live) for orange/amber

- Red: phase (live) for red

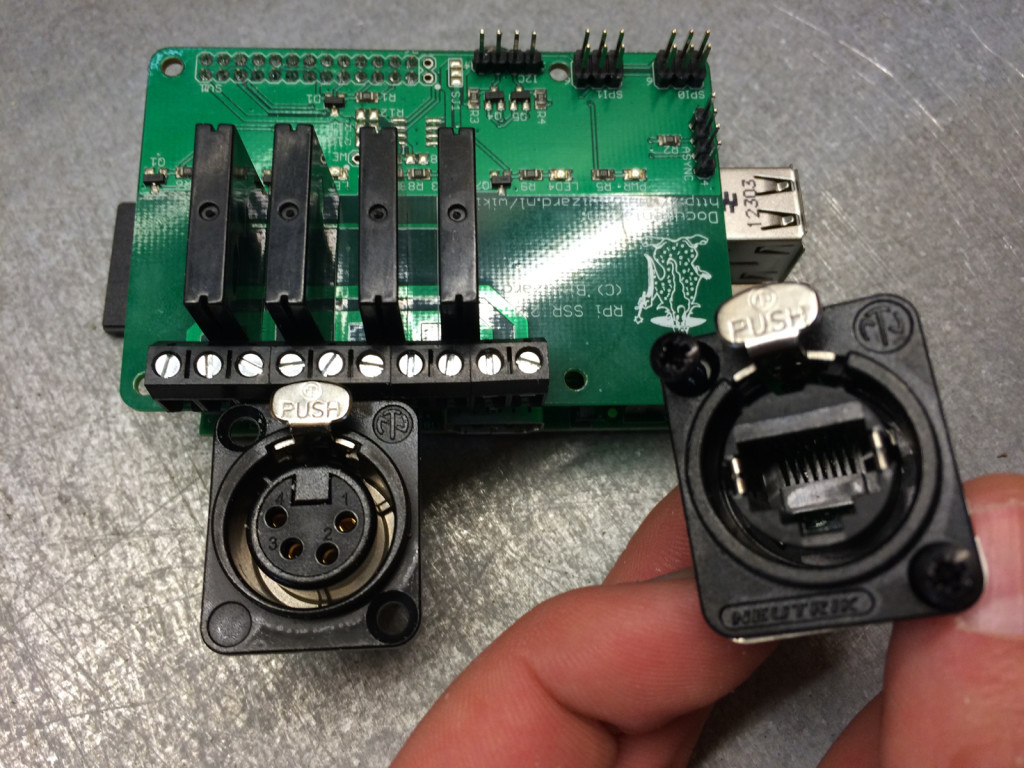

It was easy. All I had to do was buy an add-on board for a Raspberry Pi so I could control the lights.

Solid State Relay

My search for an add-on board brought me to BitWizard.nl. They make all kinds of add-on boards for the Raspberry Pi.

One of them is an SSR (Solid State Relay) board which has 4 outputs. Their wiki explained that it was very simple to control the relays using Python.

A quick test at my desk at home brought me to a working setup.

Additional components

After writing the code which controls the light, it was time to buy a housing to install everything in.

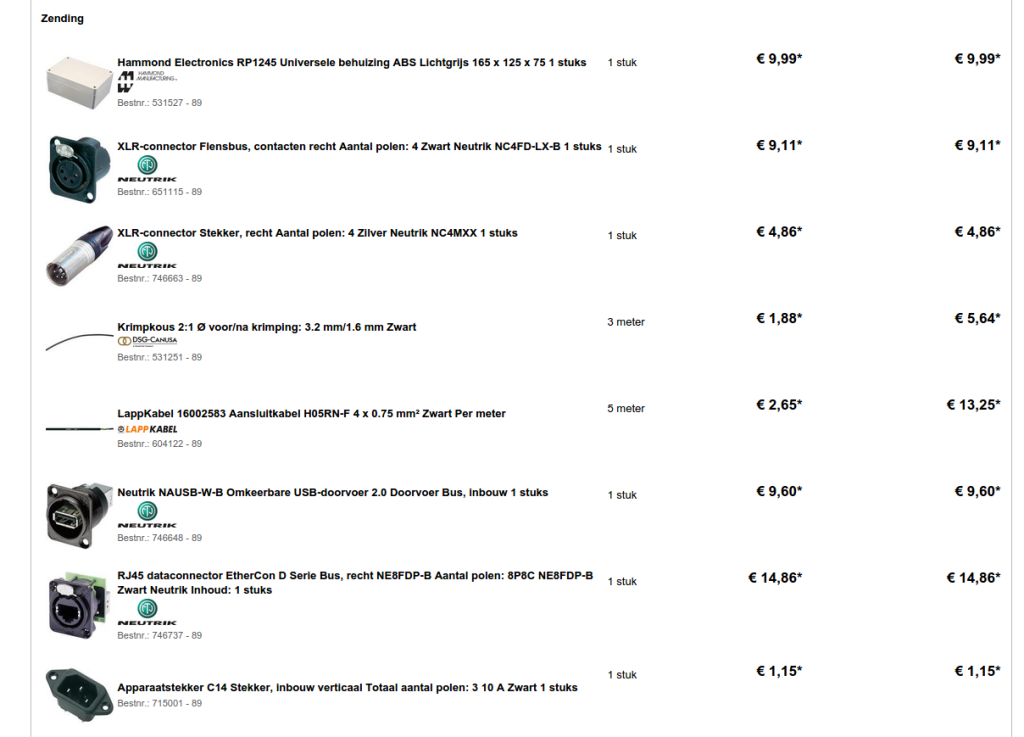

At Conrad I found the things I needed: a housing, some connectors and some cabling. An overview of my order:

A proper housing was needed since I would install it at the office and it had to be safe. You don’t want somebody to get shocked by 230V.

Bringing it together

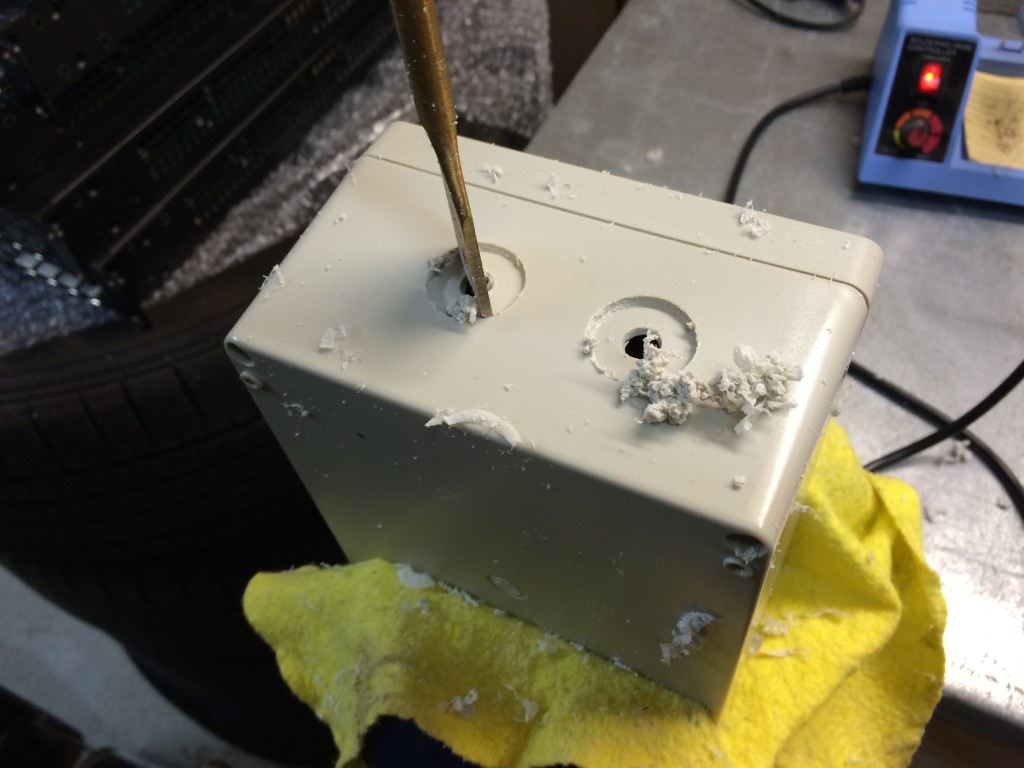

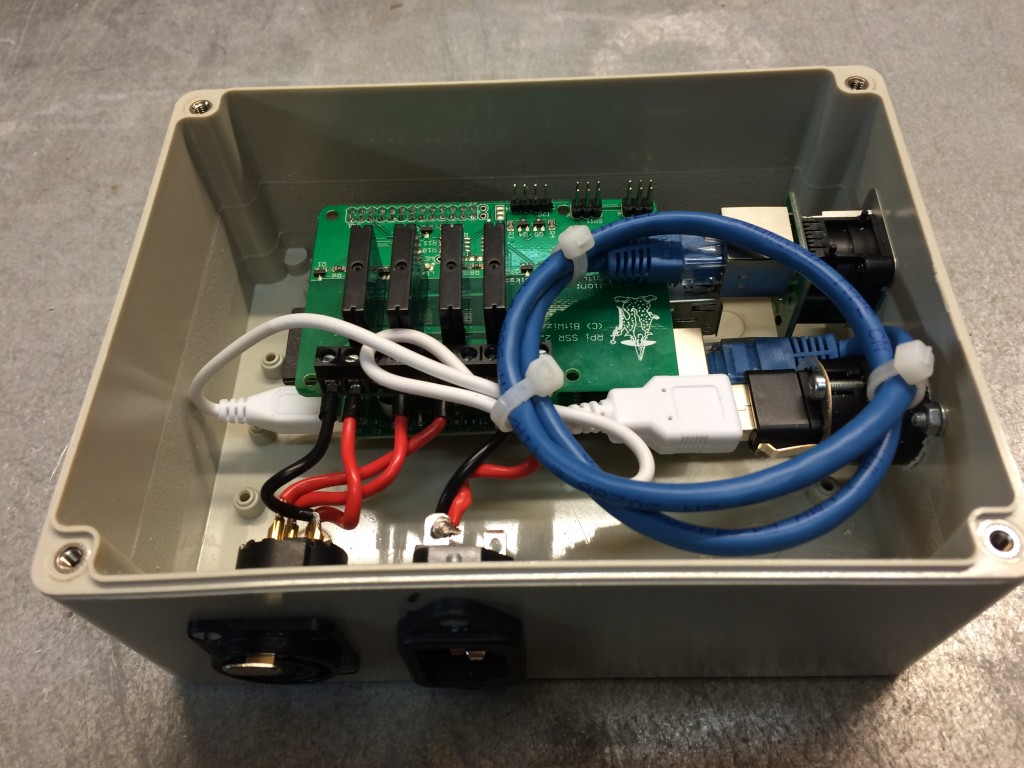

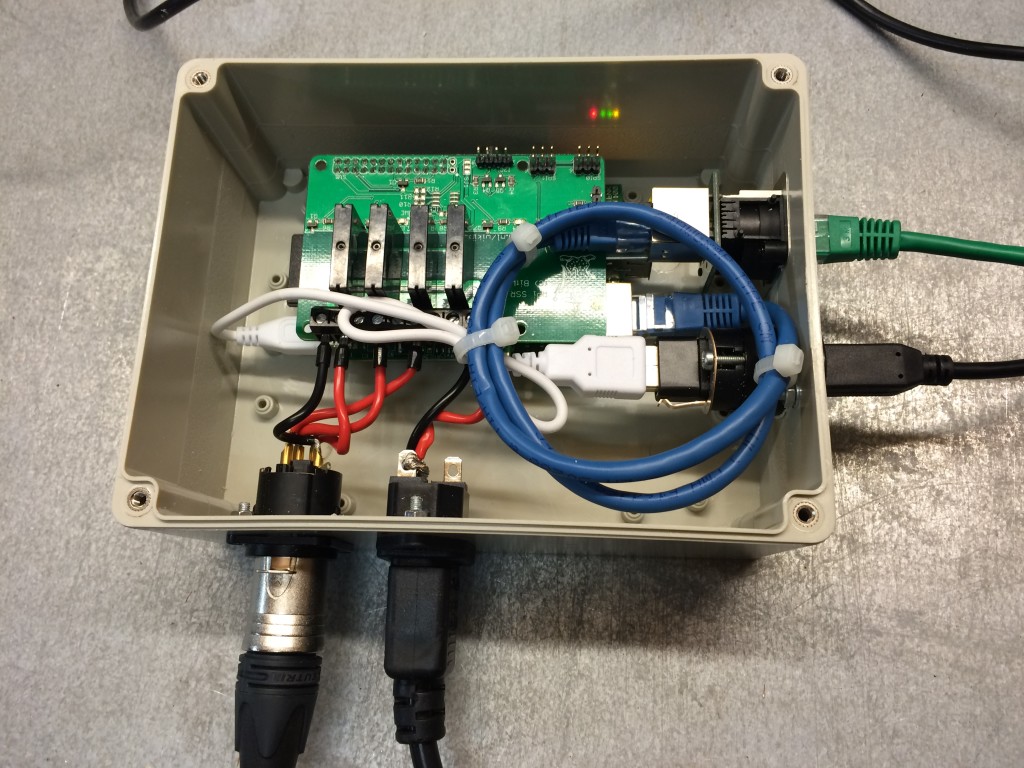

It was time to start drilling and soldering! In my shed it looked like this:

And a few more pictures of building it. Took me about 3 hours to complete.

At the office

The next day it was time to install it at the office! Some drilling and the result:

Health OK: Green

Health WARN: Amber/Orange

Health ERR: Red

No picture! We can trigger a WARN state in Ceph without service interruptions, but not an ERR state.

The code

The Python code I wrote is all on GitHub. It’s just some Python code which polls our Ceph dashboard every second. If the status changes, it changes the traffic light as well.
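To give an idea of what such a loop can look like, here is a minimal sketch of the poll-and-switch logic. It is not the code from the repository. The names `lamp_for`, `watch`, `fetch_health` and `set_lamp` are made up for this example: `fetch_health` stands in for whatever reads the status from the dashboard, and `set_lamp` for whatever drives the relay board. Treating an unknown status or a failed poll as red is also an assumption on my part.

```python
import time

# Mapping from the post: OK -> green, WARN -> orange/amber, ERR -> red.
HEALTH_TO_LAMP = {"OK": "green", "WARN": "amber", "ERR": "red"}


def lamp_for(health):
    """Translate a health string into a lamp colour.

    Accepts both the short form ("OK") and Ceph's own "HEALTH_OK" style.
    Assumption: anything unrecognised is shown as red.
    """
    key = health.strip().upper()
    if key.startswith("HEALTH_"):
        key = key[len("HEALTH_"):]
    return HEALTH_TO_LAMP.get(key, "red")


def watch(fetch_health, set_lamp, interval=1.0, max_polls=None, sleep=time.sleep):
    """Poll the health and switch the lamp only when the colour changes.

    fetch_health: hypothetical callable returning the current health string.
    set_lamp:     hypothetical callable that switches the relays for a colour.
    max_polls:    None means run forever; a number is handy for testing.
    """
    current = None
    polls = 0
    while max_polls is None or polls < max_polls:
        try:
            lamp = lamp_for(fetch_health())
        except Exception:
            # Assumption: if the poll itself fails, show red.
            lamp = "red"
        if lamp != current:
            set_lamp(lamp)
            current = lamp
        polls += 1
        sleep(interval)
    return current


if __name__ == "__main__":
    # Dry run with canned statuses instead of a real dashboard and relays.
    statuses = iter(["OK", "OK", "WARN", "OK"])
    watch(lambda: next(statuses), print, interval=0, max_polls=4)
```

Only switching on a change means the relays are left alone as long as the cluster status stays the same, even though the poll runs every second.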