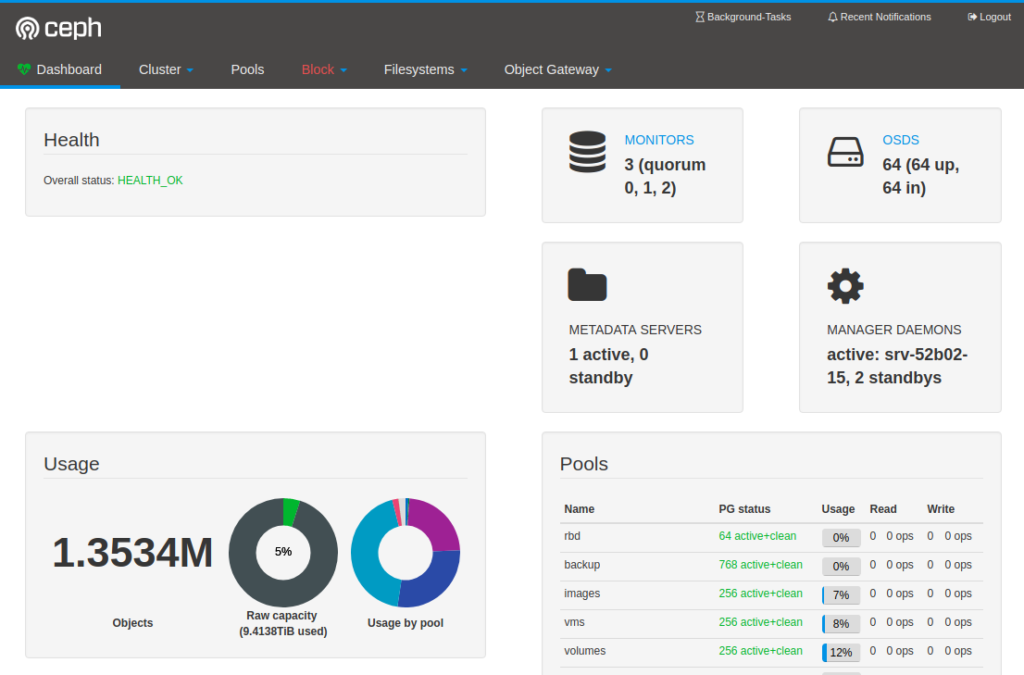

The Ceph Mgr dashboard plugin provides a simple web dashboard that shows how your Ceph cluster is performing.

In certain situations you can’t contact the Mgr daemons directly, and you have to place a proxy server between your computer and the Mgr daemons.

This can be done easily with HAProxy and the following configuration, which assumes that:

- SSL has been disabled in the Dashboard plugin

- The Dashboard plugin listens on port 8080

- Mgr is running on the hosts mon01, mon02 and mon03

    global
        log         127.0.0.1 local1
        log         127.0.0.1 local2 notice

        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy
        group       haproxy
        daemon
        stats socket /var/lib/haproxy/stats

    defaults
        log                     global
        mode                    http
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000
        option                  httplog
        no option               httpclose
        no option               http-server-close
        no option               forceclose

        stats enable
        stats hide-version
        stats refresh 30s
        stats show-node
        stats uri /haproxy?stats
        stats auth admin:haproxy

    frontend https
        bind *:80
        default_backend ceph-dashboard

    backend ceph-dashboard
        balance roundrobin
        option httpchk GET /
        http-check expect status 200
        server mon01 mon01:8080 check
        server mon02 mon02:8080 check
        server mon03 mon03:8080 check

You can now point your browser to the hostname or IP address of your HAProxy (over plain HTTP on port 80) and use your Ceph dashboard.

If a Mgr machine fails, HAProxy's health checks will make sure traffic fails over to one of the other Mgr daemons.
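To see which Mgr hosts HAProxy would currently consider healthy, you can run roughly the same check by hand. The following is a minimal Python sketch, not part of HAProxy or Ceph: it sends `GET /` to each backend from the configuration above and only counts a `200` response as healthy, mirroring `http-check expect status 200`. The names `SERVERS`, `probe` and `healthy_backends` are made up for this example, and the hostnames and port are the ones assumed earlier in this post.

```python
import http.client

# Backends as assumed in the HAProxy configuration above.
SERVERS = [("mon01", 8080), ("mon02", 8080), ("mon03", 8080)]


def probe(host, port, timeout=10.0):
    """Send GET / and return the HTTP status code, or None if unreachable.

    http.client does not follow redirects, so a redirect response is
    reported as-is (for example 303) rather than as the status of the
    redirect target.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", "/")
        return conn.getresponse().status
    except (OSError, http.client.HTTPException):
        # DNS failure, connection refused, timeout, malformed reply, ...
        return None
    finally:
        conn.close()


def healthy_backends(servers, probe_fn=probe):
    """Return the (host, port) pairs that answer GET / with status 200."""
    return [(host, port) for host, port in servers if probe_fn(host, port) == 200]


# Example usage (needs network access to the Mgr hosts):
#   print(healthy_backends(SERVERS))
```

The `probe_fn` parameter is only there so the selection logic can be exercised without a live cluster; in normal use you would call `healthy_backends(SERVERS)` from a machine that can reach the Mgr hosts.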