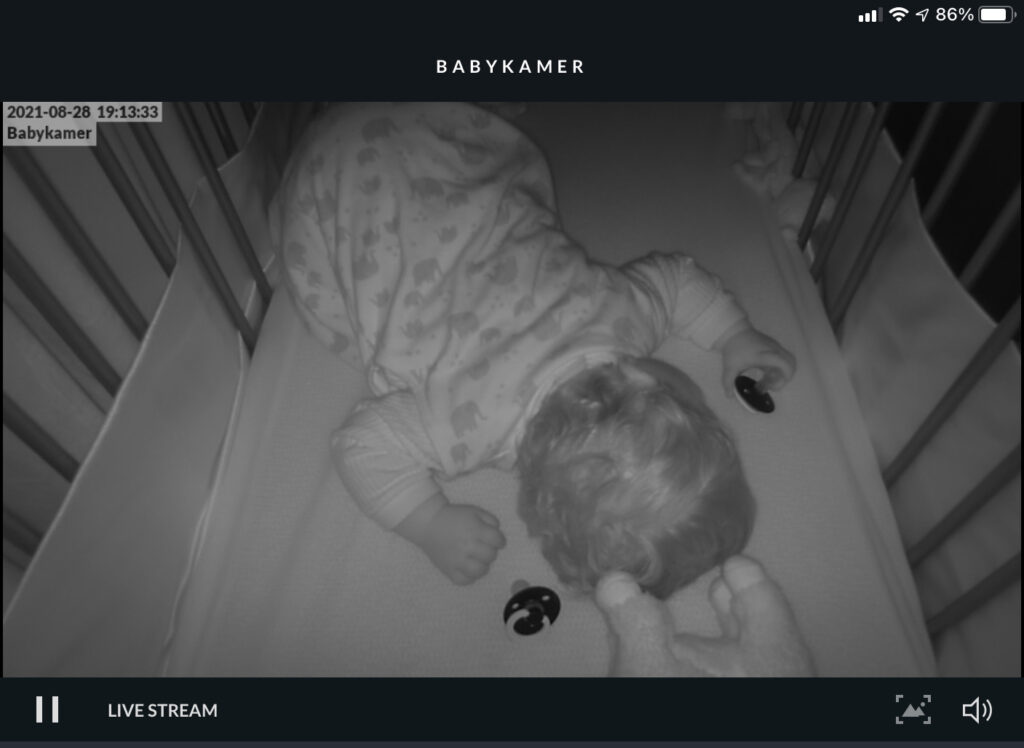

It’s been almost a year that I’ve been living in my new house and proud father of a son.

Almost every parent wants to watch their little on through a camera to see them sleeping in their bed.

There are many, many, many baby monitors on the market but in my experience they are all crap. Our house is build with a lot of concrete and none of them was able to penetrate the walls in our house and thus have a reliable video connection.

I also tried some IP/WiFi based baby monitors from for example Foscam, but these are all cheap Chinese cameras and didn’t work either. Crashing iPad apps, sending random (UDP) packets to China, half-baked UIs, etc, etc. They were all very low quality.

After a lot of frustrating I bought a Unifi Video G3 (UVC-G3-BULLET) camera and mounted it on the bed of my son.

I am using multiple Unifi video cameras around my house using Unifi Video, so for me it was just a matter of adding an additional camera to my Unifi system.

RTMP + HLS with Nginx

I also experimented with building a video stream using RTMP, ffmpeg and HLS with Nginx.

The Unifi cameras can export a RTSP stream which you can set up to live stream with Nginx. I tried this and it works for me, but with a delay of ~20s I thought it was not usable on my use-case.

It does however allow for a very easy way of building your own webcam!