Our house (built in 2020) is heated and cooled by a Stiebel Eltron WPL20AC heat pump. Being a techie, I went looking for a way to extract some statistics from the heat pump and feed them into my Home Assistant.

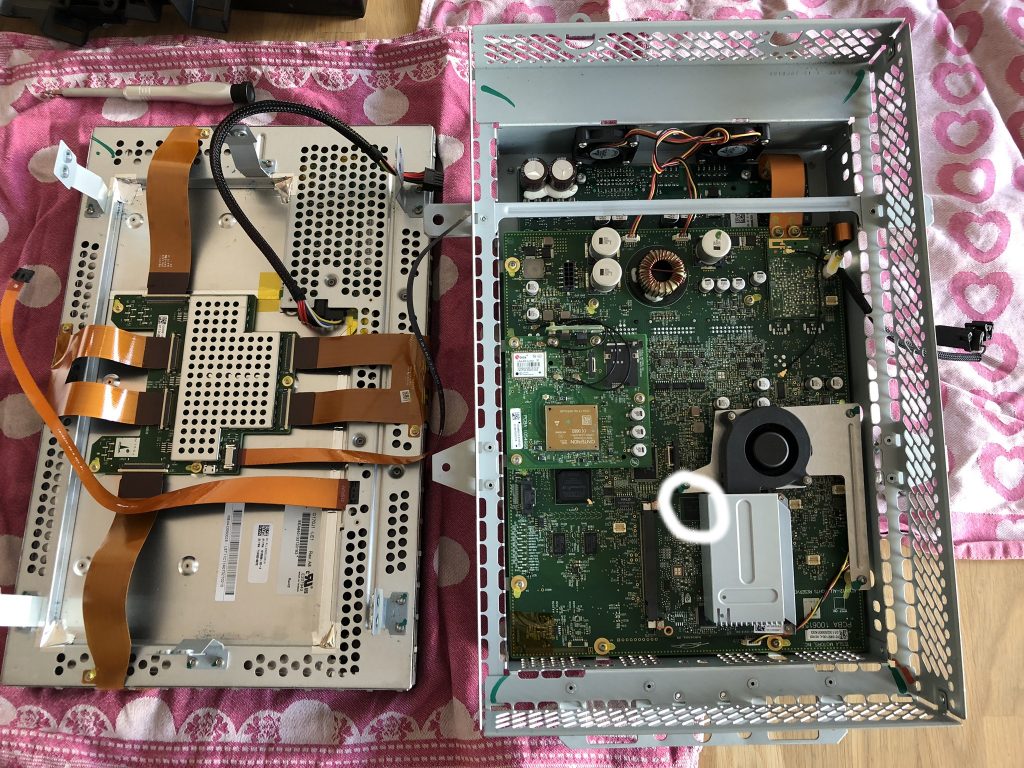

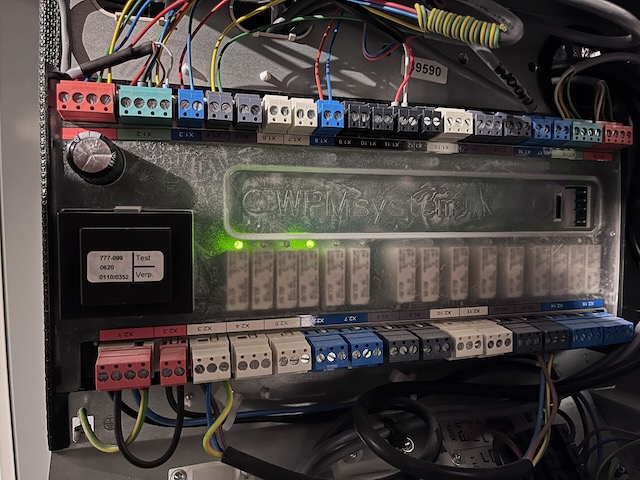

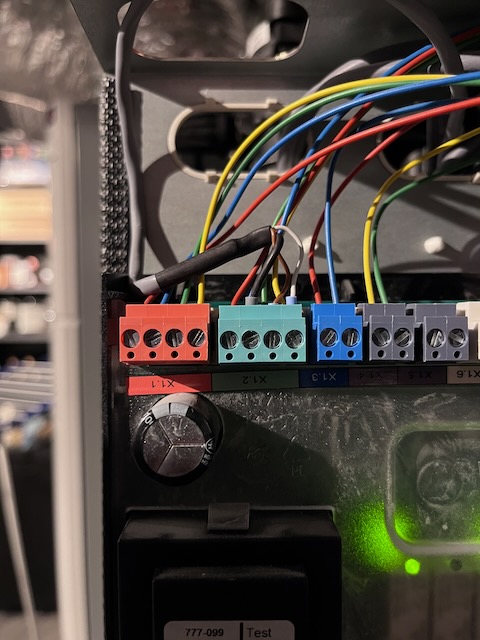

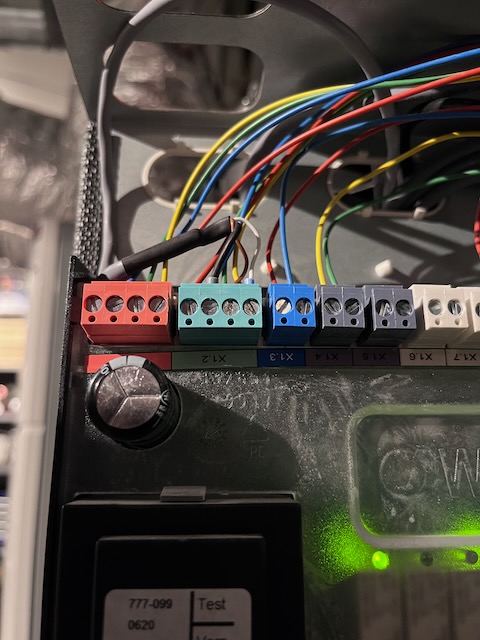

The pictures above show the system during installation in the summer of 2020.

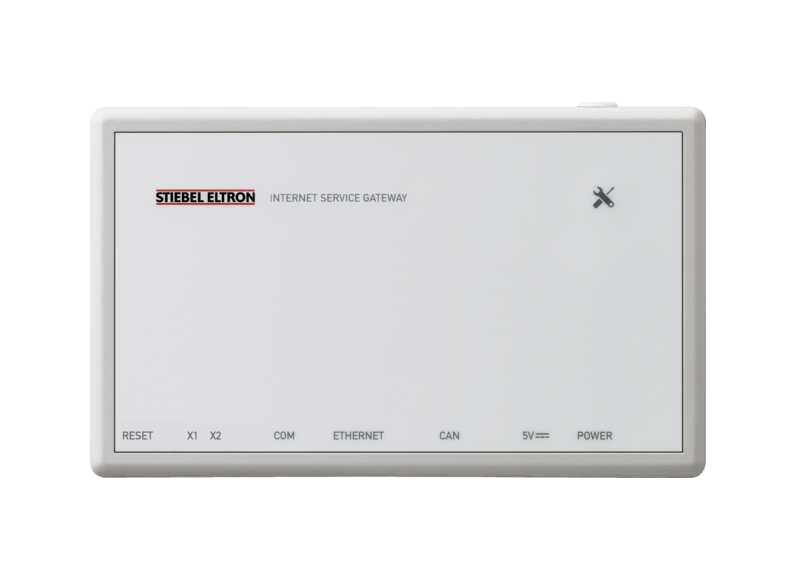

ISG web

Fast forward to 2024 and after some searching I found the ISG web from Stiebel Eltron.

The ISG web is a device that connects to the CAN bus of the heat pump and exposes its information via a web UI and additionally via Modbus TCP/IP (additional software required!).

I purchased an ISG web (EUR 170) and tried to connect it myself.

I thought it would be a matter of plugging in the Ethernet cable and then connecting the CAN bus cable to the WPsystem board inside the heat pump. It turned out that every connector on the board was already occupied, and the Stiebel Eltron manuals were not very clear about where the ISG should go.

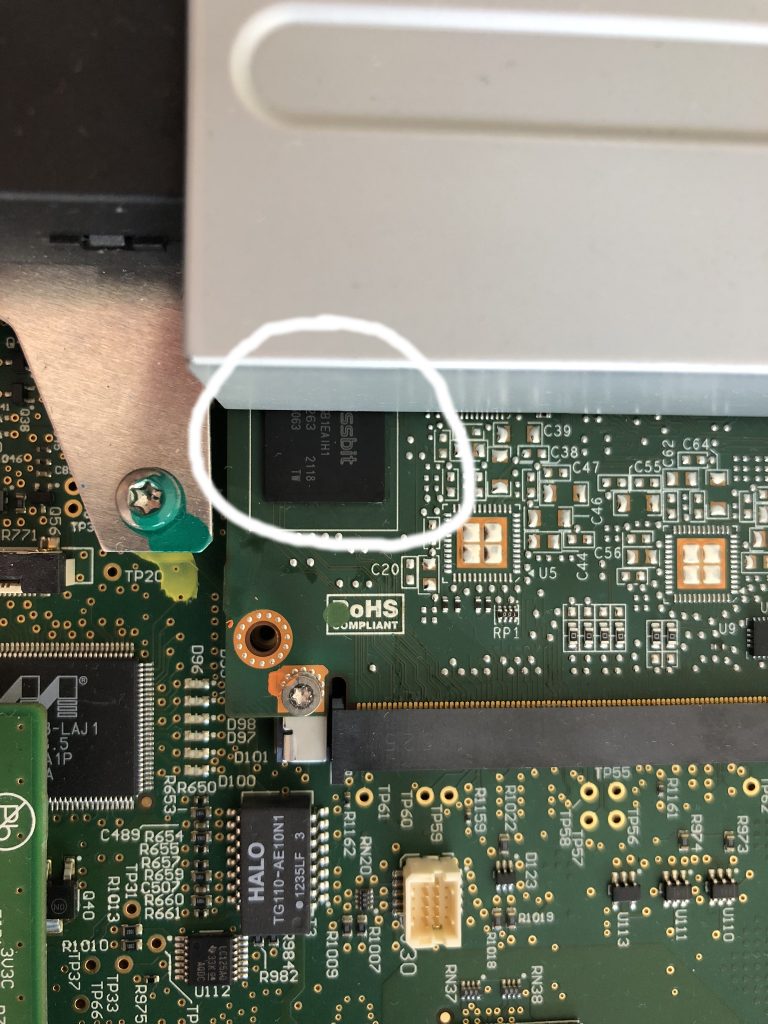

CAN bus connection

Luckily a service technician came over for some maintenance on the heat pump, and I asked him to connect the ISG to the WPsystem board. He did so by wiring the cable to the GREEN (CAN B) connector X1.2.

Here you can see the grey cable coming from the ISG web, connected to the X1.2 connector in parallel with the cable that was already there.

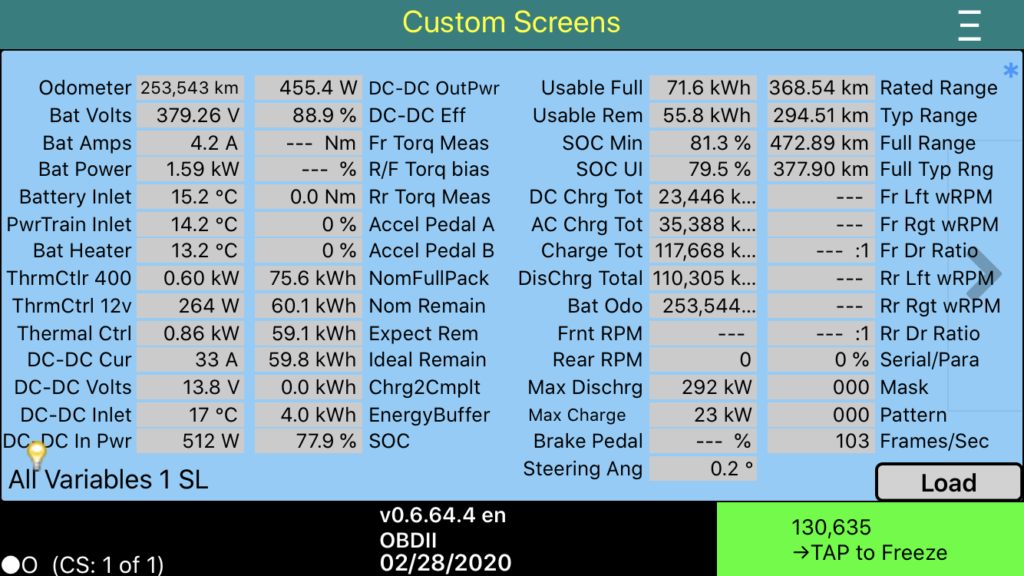

ISG web UI

The ISG is now working and I can see the information in the Web UI.

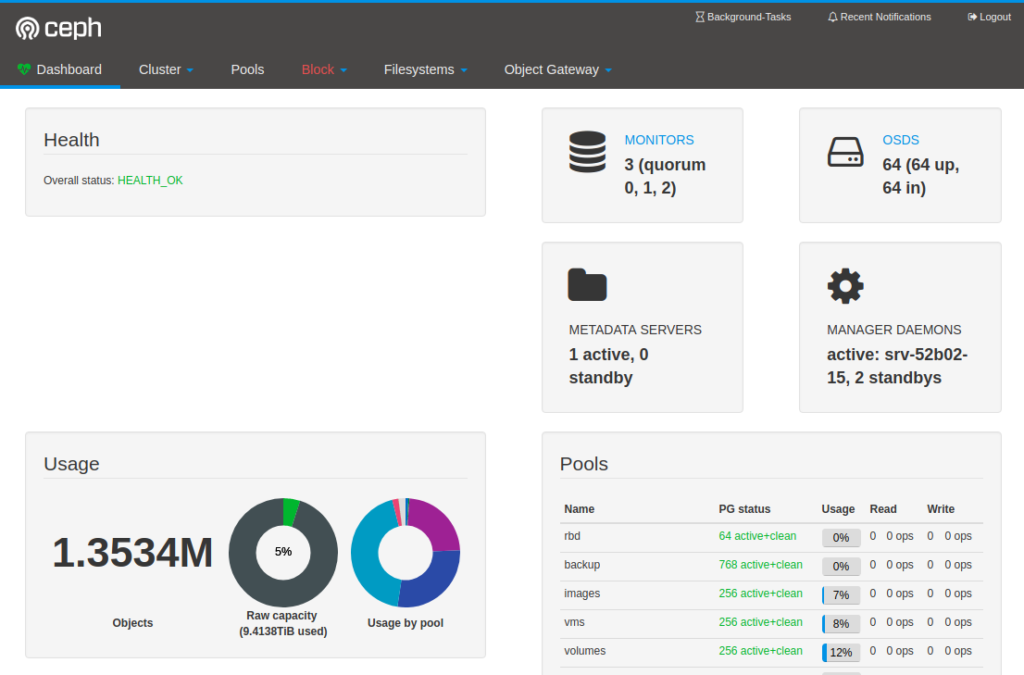

Modbus & Home Assistant

Next on my to-do list is connecting the ISG web to my Home Assistant using the Stiebel Eltron integration.

The Modbus TCP/IP port 502 doesn’t seem to respond on my ISG, but this might be due to the stock firmware 12.2.3 build 260 it was delivered with.
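To tell a closed port apart from a network problem, a quick TCP reachability check helps. Below is a minimal sketch in Python using only the standard library. The function name `is_tcp_port_open` is my own, and the IP address in the usage note is a placeholder, not my real ISG address. It only checks whether something accepts a TCP connection on the port; it does not speak Modbus.

```python
import socket


def is_tcp_port_open(host: str, port: int = 502, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established.

    This only tests reachability of the port. It does not send any
    Modbus request, so True means "something is listening", nothing more.
    """
    try:
        # create_connection resolves the host and attempts a TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts
        return False
```

Calling `is_tcp_port_open("192.168.1.50")` (with the ISG's actual address substituted) returns `False` when the port is closed or filtered. If the web UI on the same address loads fine while this returns `False`, the network is fine and Modbus is most likely just not enabled on the device.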

I have sent an e-mail to Stiebel Eltron asking how to get Modbus enabled on my ISG. Once I know more I will update this post.